We work with Planet Bluegrass, the amazing folks out of Lyons, Colorado, who have been putting on the Telluride Bluegrass Festival since 1974. They’ve resisted selling their tickets through Ticketmaster, Eventbrite, Ticketfly, or any of the corporate concert ticket sales outlets because they don’t believe in charging a fee to their concert-goers, because they do believe in giving full refunds, and because they like to support locally owned businesses like Insight Designs. Thanks!

Why would anyone need to process 100 orders per second? Well, in this case the client wants to be egalitarian and have the tickets go out on a first-come, first-served basis. No preferential treatment is given to people who have attended the festival before or to those spending more money. The tickets go on sale at 9:00 AM MST, which results in thousands of visitors hitting refresh as the clock counts down toward sale time. And then once the sale has begun, visitors try to check out as quickly as possible.

In addition to selling high-demand tickets online, there are plenty of other examples where someone’s ecommerce store might need to process lots of orders very quickly. A store running a Black Friday, Cyber Monday, or flash sale, or any high-volume website, is a candidate for getting clobbered. We’ve had clients get mentioned on the national news and then seen their sales skyrocket.

So why are we writing this post? Winston Churchill said “Success consists of going from failure to failure without loss of enthusiasm.” That’s a gentle way of acknowledging that there were years when the Telluride Bluegrass Festival online ticket sales system failed, and that Planet Bluegrass and Insight Designs have enthusiastically and tenaciously stuck with it, learning from the failures and finally arriving at a powerful, distributed, open-source solution. It’s not a perfect solution, but it smoothly processed tens of thousands of tickets in a few minutes, saving Festivarians several thousand dollars in fees that would otherwise have gone to a big corporation like Ticketmaster. The LA Times wrote an interesting article about Ticketmaster fees.

In 2016, the Magento solution that Insight Designs implemented for Planet Bluegrass got up to 27 orders a second and then came to a grinding halt when the master database started accumulating too many write locks. One of the downsides of a relational database, like the MySQL database that Magento uses by default, is that a write has to acquire a lock on the data it touches before it can proceed. When thousands of checkouts write at the same moment, they queue up behind one another, and the time spent acquiring and releasing those locks can become the bottleneck to scalable sales. After a database restart and about 10 minutes of downtime (and frustrated would-be ticket buyers), the system came back and successfully processed the remaining tickets. The 2016 Magento architecture, which almost worked, consisted of:

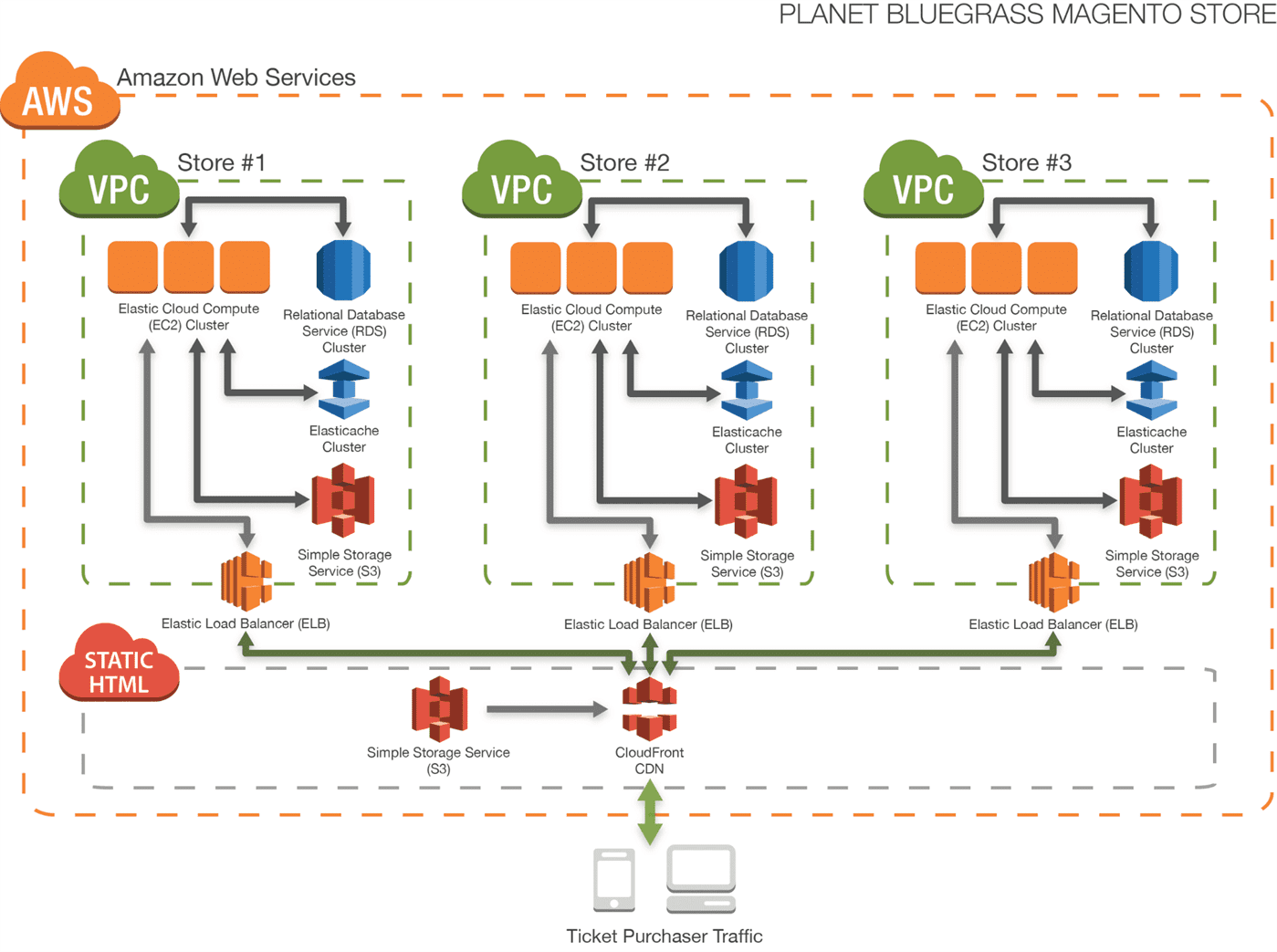

Planet Bluegrass wasn’t ready to throw in the towel and move to Ticketmaster for 2017, and instead took Churchill’s advice. Craig Ferguson, the President of Planet Bluegrass and the Telluride Bluegrass Festival Director, said “Why don’t we just have three different websites for processing tickets?” And that was it: the least technical person sitting at the conference room table had the idea that made the 2017 sale a success. The 2017 Magento architecture, which worked perfectly, consisted of:

1 static cloud-hosted webpage (AWS S3 and CloudFront) to direct users to one of three completely separate Magento store clusters. Users entered their email address, and based on where it fit in the alphabet they were consistently sent to the same one of the three store clusters. Think of this as a manual load balancer. We toyed with the idea of using IP addresses, or randomly assigning people to a store, but we settled on email addresses so that a user could return from a different IP address, on a different browser, and still land on the Magento store with their account on it. Users often buy camping tickets as a separate transaction from concert tickets, perhaps an hour later from work instead of from home, and we wanted those users to be on the same Magento store.

3 store clusters, each with 40 xlarge EC2 instances, 1 master RDS database instance, 1 read replica RDS database instance, and 1 xlarge Redis ElastiCache node.
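
To make the email-based routing concrete, here is a minimal sketch of the idea. It is not the code from the actual landing page: the store URLs and the alphabet split points (a-h, i-q, r-z) are assumptions made up for illustration, and on a static S3 page the same logic would run as JavaScript in the browser.

```python
# Hypothetical store URLs; the real hostnames are not given in this post.
STORE_URLS = [
    "https://store-a.example.com",
    "https://store-b.example.com",
    "https://store-c.example.com",
]


def store_index_for_email(email: str) -> int:
    """Map an email address to store 0, 1, or 2 by its first character.

    The split points below are assumed; in practice they would be tuned
    so that each store receives roughly a third of the customers.
    """
    normalized = email.strip().lower()
    if not normalized:
        raise ValueError("email address is required")
    first = normalized[0]
    if first < "i":
        return 0  # digits, punctuation, and a-h
    if first < "r":
        return 1  # i-q
    return 2      # r-z and anything sorting after "r"


def store_url_for_email(email: str) -> str:
    """Return the store URL the visitor should be redirected to."""
    return STORE_URLS[store_index_for_email(email)]
```

Because the result depends only on the normalized email address, the same customer lands on the same store no matter which device, browser, or network they return from.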

In addition to splitting the traffic up across three separate stores, we also improved the 2016 architecture by implementing full-page caching.

Customer experience is always priority number one, and the three different Magento clusters didn’t seem to negatively affect any customers. But at the end of the sale, the Planet Bluegrass customer service staff doesn’t want to manage orders on three different Magento backends. And while it’s easy to take the load balancer pools down to a single EC2 instance, because the traffic is insignificant after the sale, they’d rather just pay for one running instance instead of three. So Insight Designs wrote an import script to get the orders all imported into a single Magento admin.
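
One thing any such merge has to deal with is that three independent stores can each issue the same order number. The sketch below shows one way to keep merged order numbers unique by prefixing each with a store tag. It is an illustration under assumptions, not our import script: the `Order` fields, the store tags, and the prefixing scheme are all hypothetical, and a real import would go through Magento's own order data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    increment_id: str  # order number shown to the customer (hypothetical field)
    email: str
    total: float


def merge_orders(orders_by_store: dict[str, list[Order]]) -> list[Order]:
    """Combine orders from several stores into one list with unique IDs.

    Each order number is prefixed with its store tag (for example "A-"),
    so identical numbers issued by different stores cannot collide.
    """
    merged: list[Order] = []
    seen: set[str] = set()
    for store_tag in sorted(orders_by_store):
        for order in orders_by_store[store_tag]:
            new_id = f"{store_tag}-{order.increment_id}"
            if new_id in seen:
                raise ValueError(f"duplicate order number: {new_id}")
            seen.add(new_id)
            merged.append(Order(new_id, order.email, order.total))
    return merged
```

For example, if stores "A" and "B" both issued order number `100000001`, the merged list would hold `A-100000001` and `B-100000001`.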

Will we use this same structure again in 2018? We believe that as Amazon’s Aurora database engine matures (and maybe becomes less expensive), having three clusters won’t be necessary, which would be welcome. But if we have to do this again, we’re ready.

If you’re having a hard time getting Magento Community to perform well during high-traffic periods, we hope that something above helps you out. And if you’d rather just hire someone to help you out, we’d love to hear from you!

And for full disclosure, we don’t actually know how many orders per second you can process with this architecture. It depends on how efficient your Magento application is, the size and number of your EC2 and RDS servers, whether or not you’re calling third-party APIs in the checkout process, how well your payment gateway can handle rapid transactions to the same account, etc. Looking at the performance graphs in the AWS dashboard, it appears that nothing was getting taxed and that we could have handled three times more traffic.